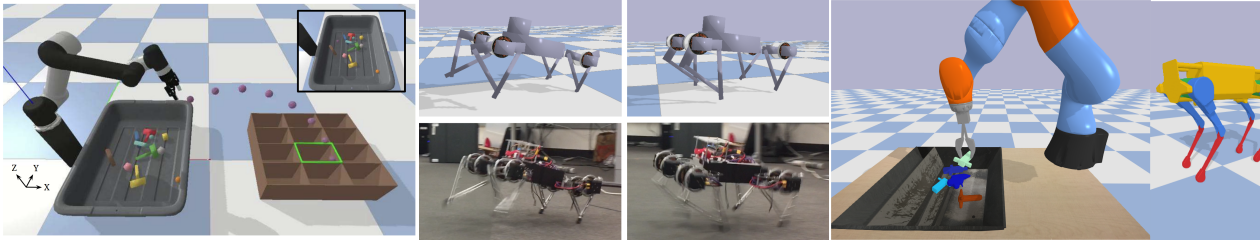

We are developing a new differentiable simulator for robotics learning, called Tiny Differentiable Simulator, or TDS. The simulator supports hybrid simulation, combining the physics engine with neural networks. It works with different automatic differentiation backends, for forward- and reverse-mode gradients. TDS can be used for training with deep reinforcement learning or with gradient-based optimization (for example, L-BFGS). In addition, the simulator can run entirely on CUDA for fast rollouts, in combination with Augmented Random Search. This allows for 1 million simulation steps per second.
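To make the idea of differentiating through a simulation concrete, here is a minimal, standalone sketch in plain Python. It does not use the TDS API: the `Dual` class, `simulate`, `loss_and_grad`, and `optimize_initial_velocity` are hypothetical names invented for this illustration, and the physics is a toy 1D point mass under gravity. It shows forward-mode automatic differentiation (dual numbers) carried through an integration loop, followed by plain gradient descent rather than L-BFGS.

```python
from dataclasses import dataclass


def _lift(x):
    """Wrap a plain number as a Dual with zero derivative."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


@dataclass
class Dual:
    """Dual number for forward-mode autodiff: a value and its derivative."""
    val: float
    dot: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.val - other.val, self.dot - other.dot)

    def __rsub__(self, other):
        return _lift(other) - self

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (a * b)' = a * b' + a' * b
        return Dual(self.val * other.val,
                    self.val * other.dot + self.dot * other.val)

    __rmul__ = __mul__


def simulate(v0, steps=100, dt=0.01, gravity=-9.81):
    """Toy simulation: point mass launched upward with velocity v0.

    Uses semi-implicit Euler and returns the final height. Works with
    plain floats or with Dual numbers.
    """
    x, v = 0.0, v0
    for _ in range(steps):
        v = v + dt * gravity
        x = x + dt * v
    return x


def loss_and_grad(v0, target):
    """Squared height error and its derivative with respect to v0."""
    final = simulate(Dual(v0, 1.0))  # seed d(v0)/d(v0) = 1
    err = final - target
    loss = err * err
    return loss.val, loss.dot


def optimize_initial_velocity(target, v0=0.0, iters=50, lr=0.25):
    """Gradient descent on v0 so the mass ends at the target height."""
    for _ in range(iters):
        _, grad = loss_and_grad(v0, target)
        v0 -= lr * grad
    return v0


if __name__ == "__main__":
    v_opt = optimize_initial_velocity(target=2.0)
    print("initial velocity:", v_opt, "final height:", simulate(v_opt))
```

Because the derivative is propagated alongside the state at every step, the gradient comes out of the same rollout that computes the loss. Forward mode suits a single parameter as here; with many parameters (for example neural network weights), reverse mode is usually the cheaper choice.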

TDS is used in a couple of research papers, such as the ICRA 2021 paper “NeuralSim: Augmenting Differentiable Simulators with Neural Networks”, by Eric Heiden, David Millard, Erwin Coumans, Yizhou Sheng and Gaurav S. Sukhatme.

You can check out the source code here:

https://github.com/google-research/tiny-differentiable-simulator